Scientists believe the environment immediately surrounding a black hole is tumultuous, featuring hot magnetized gas that spirals in a disk at tremendous speeds and temperatures. Astronomical observations show that within such a disk, mysterious flares occur up to several times a day, temporarily brightening and then fading away.

Now a team led by Caltech scientists has used telescope data and an artificial intelligence (AI) computer-vision technique to recover the first three-dimensional video showing what such flares could look like around Sagittarius A* (Sgr A*), the supermassive black hole at the heart of our own Milky Way galaxy.

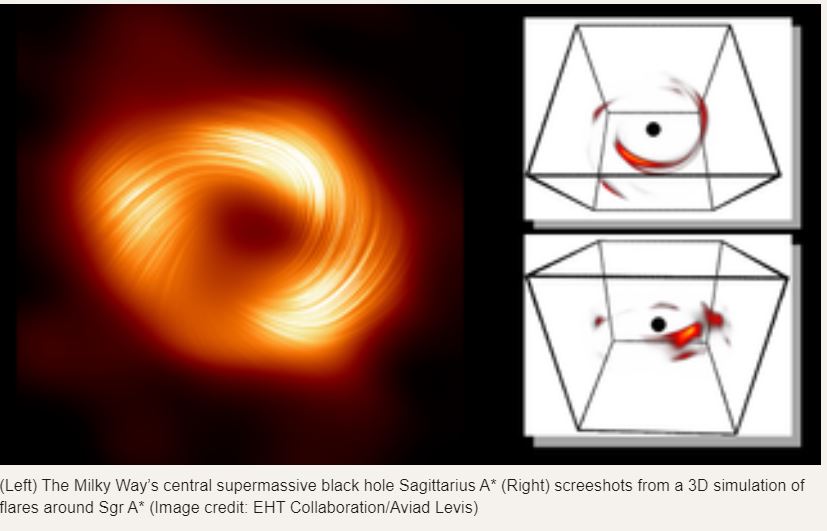

The 3D flare structure shows two bright, compact features located about 75 million kilometers (or half the distance between Earth and the sun) from the center of the black hole. It is based on data collected by the Atacama Large Millimeter Array (ALMA) in Chile over a period of 100 minutes directly after an eruption seen in X-ray data on April 11, 2017.

“This is the first three-dimensional reconstruction of gas rotating close to a black hole,” says Katie Bouman, assistant professor of computing and mathematical sciences, electrical engineering and astronomy at Caltech, whose group led the effort described in a paper in Nature Astronomy titled “Orbital Polarimetric Tomography of a Flare Near the Sagittarius A* Supermassive Black Hole.”

Aviad Levis, a postdoctoral scholar in Bouman’s group and lead author of the paper, emphasizes that while the video is not a simulation, it is also not a direct recording of events as they took place. “It is a reconstruction based on our models of black hole physics. There is still a lot of uncertainty associated with it because it relies on these models being accurate,” he says.

Using AI informed by physics to figure out possible 3D structures

To reconstruct the 3D image, the team had to develop new computational imaging tools that could, for example, account for the bending of light due to the curvature of space-time around objects of enormous gravity, such as a black hole.

In June 2021, the multidisciplinary team first considered whether it would be possible to create a 3D video of flares around a black hole. The Event Horizon Telescope (EHT) Collaboration, of which Bouman and Levis are members, had already published the first image of the supermassive black hole at the core of a distant galaxy, called M87, and was working to do the same with EHT data from Sgr A*.

Pratul Srinivasan of Google Research, a co-author of the new paper, was at the time visiting the team at Caltech. He had helped develop a technique known as neural radiance fields (NeRF) that was then just starting to be used by researchers; it has since had a huge impact on computer graphics. NeRF uses deep learning to create a 3D representation of a scene based on 2D images. It provides a way to observe scenes from different angles, even when only limited views of the scene are available.

The team wondered if, by building on these recent developments in neural network representations, they could reconstruct the 3D environment around a black hole. Their big challenge: From Earth, as anywhere, we only get a single viewpoint of the black hole.

The team thought that they might be able to overcome this problem because gas behaves in a somewhat predictable way as it moves around the black hole. Consider the analogy of trying to capture a 3D image of a child wearing an inner tube around their waist.

To capture such an image with the traditional NeRF method, you would need photos taken from multiple angles while the child remained stationary. But in theory, you could ask the child to rotate while the photographer remained stationary taking pictures.

The timed snapshots, combined with information about the child’s rotation speed, could be used to reconstruct the 3D scene equally well. Similarly, by leveraging knowledge of how gas moves at different distances from a black hole, the researchers aimed to solve the 3D flare reconstruction problem with measurements taken from Earth over time.

With this insight in hand, the team built a version of NeRF that takes into account how gas moves around black holes. But it also needed to consider how light bends around massive objects such as black holes. Under the guidance of co-author Andrew Chael of Princeton University, the team developed a computer model to simulate this bending, also known as gravitational lensing.

With these considerations in place, the new version of NeRF was able to recover the structure of orbiting bright features around the event horizon of a black hole. Indeed, the initial proof-of-concept showed promising results on synthetic data.

A flare around Sgr A* to study

But the team needed some real data. That’s where ALMA came in. The EHT’s now famous image of Sgr A* was based on data collected on April 6–7, 2017, which were relatively calm days in the environment surrounding the black hole. But astronomers detected an explosive and sudden brightening in the surroundings just a few days later, on April 11.

When team member Maciek Wielgus of the Max Planck Institute for Radio Astronomy in Germany went back to the ALMA data from that day, he noticed a signal with a period matching the time it would take for a bright spot within the disk to complete an orbit around Sgr A*. The team set out to recover the 3D structure of that brightening around Sgr A*.
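To get a feel for the timescale involved, the orbital period of a bright spot can be estimated with Kepler's third law. The snippet below is a rough sketch, not the team's calculation: it uses a Newtonian circular orbit, ignores relativistic corrections, and assumes a mass for Sgr A* of about 4.3 million suns, a figure that does not appear in the article. The function and constant names are illustrative.

```python
import math

# Assumed constants (not taken from the article).
GM_SUN = 1.32712440018e20   # m^3/s^2, gravitational parameter of the sun
SGR_A_MASS_SOLAR = 4.3e6    # assumed mass of Sgr A* in solar masses


def kepler_period_minutes(radius_m, mass_solar=SGR_A_MASS_SOLAR):
    """Newtonian period of a circular orbit, in minutes (no relativity)."""
    gm = GM_SUN * mass_solar
    return 2.0 * math.pi * math.sqrt(radius_m ** 3 / gm) / 60.0


# 75 million km is the flare distance quoted in the article.
period_min = kepler_period_minutes(75e9)
print(f"Approximate orbital period: {period_min:.0f} minutes")
```

Under these assumptions the period comes out at about 90 minutes, the same order as the 100-minute ALMA observing window.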

ALMA is one of the most powerful radio telescopes in the world. However, because of the vast distance to the galactic center (more than 26,000 light-years), even ALMA does not have the resolution to see Sgr A*’s immediate surroundings. What ALMA measures are light curves, which are essentially videos of a single flickering pixel. Each one is created by collecting all of the radio-wavelength light detected by the telescope at each moment of observation.
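The idea of a single-pixel video can be illustrated with a toy example. In the sketch below, each hypothetical frame is a tiny image, and the light curve keeps only the total brightness of each frame. The frames and the function name are made up for illustration and are not ALMA data.

```python
def light_curve(frames):
    """Collapse each 2D frame into one number: its total brightness."""
    return [sum(sum(row) for row in frame) for frame in frames]


# Two hypothetical 3x3 frames with the same total brightness but with the
# bright spot in different places.
frame_a = [[0, 0, 0], [0, 5, 0], [0, 0, 0]]
frame_b = [[5, 0, 0], [0, 0, 0], [0, 0, 0]]
# A third frame in which the spot has moved again and brightened.
frame_c = [[0, 0, 0], [0, 0, 0], [0, 0, 8]]

curve = light_curve([frame_a, frame_b, frame_c])
print(curve)  # [5, 5, 8]
```

The first two frames give the same value even though the spot has moved, which is why a light curve on its own carries no spatial information.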

Recovering a 3D volume from a single-pixel video might seem impossible. However, by leveraging an additional piece of information about the physics expected of the disk around a black hole, the team was able to get around the lack of spatial information in the ALMA data.

Strongly polarized light from the flares provided clues

ALMA doesn’t just capture a single light curve. In fact, it provides several such “videos” for each observation because the telescope records data relating to different polarization states of light. Like wavelength and intensity, polarization is a fundamental property of light and represents which direction the electric component of a light wave is oriented with respect to the wave’s general direction of travel.

“What we get from ALMA is two polarized single-pixel videos,” says Bouman, who is also a Rosenberg Scholar and a Heritage Medical Research Institute Investigator. “That polarized light is actually really, really informative.”

Recent theoretical studies suggest that hot spots forming within the gas are strongly polarized, meaning the light waves coming from these hot spots have a distinct preferred orientation direction. This is in contrast to the rest of the gas, which has a more random or scrambled orientation. By gathering the different polarization measurements, the ALMA data gave the scientists information that could help localize where the emission was coming from in 3D space.
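Linear polarization is commonly described with the Stokes parameters Q and U, which add up across a source just as brightness does. The sketch below assumes that convention (the article does not say which quantities the polarized light curves hold) and uses made-up numbers to show how a strongly polarized hot spot stands out against unpolarized gas.

```python
import math


def stokes_qu(intensity, pol_fraction, angle_rad):
    """Stokes Q and U for light with a given linear polarization."""
    q = pol_fraction * intensity * math.cos(2.0 * angle_rad)
    u = pol_fraction * intensity * math.sin(2.0 * angle_rad)
    return q, u


def pol_fraction_and_angle(intensity, q, u):
    """Recover the polarization fraction and angle from I, Q and U."""
    return math.hypot(q, u) / intensity, 0.5 * math.atan2(u, q)


# Hypothetical values: a hot spot that is 60% polarized at 30 degrees,
# seen together with four times as much unpolarized gas.
spot_i, gas_i = 1.0, 4.0
spot_q, spot_u = stokes_qu(spot_i, 0.6, math.radians(30.0))
gas_q, gas_u = stokes_qu(gas_i, 0.0, 0.0)

total_i = spot_i + gas_i
fraction, angle = pol_fraction_and_angle(total_i, spot_q + gas_q, spot_u + gas_u)
print(f"fraction={fraction:.2f}, angle={math.degrees(angle):.0f} deg")
```

In this toy case the unpolarized gas dilutes the polarization fraction, but the measured angle is still set entirely by the hot spot. That is the kind of extra handle polarized light curves can offer.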

Introducing orbital polarimetric tomography

To figure out a likely 3D structure that explained the observations, the team developed an updated version of its method that incorporated not only the physics of light bending and dynamics around a black hole but also the polarized emission expected in hot spots orbiting a black hole. In this technique, each potential flare structure is represented as a continuous volume using a neural network.

This allows the researchers to computationally progress the initial 3D structure of a hot spot over time as it orbits the black hole to create a whole light curve. They could then solve for the best initial 3D structure that, when progressed in time according to black hole physics, matched the ALMA observations.
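The fit-to-the-light-curve idea can be sketched on a much smaller problem. The toy below is not the team's method: it replaces the 3D volume with eight bins on a single ring, the neural network with a plain list of numbers, orbital dynamics with rigid rotation of one bin per time step, and the relativistic physics with a made-up viewing weight. All names and values are hypothetical.

```python
import math

N_BINS = 8  # azimuthal bins on one ring (toy stand-in for a 3D volume)

# Made-up viewing weight: emission on one side of the orbit counts for more.
# It stands in for effects that a real model would compute from physics.
WEIGHTS = [math.exp(2.0 * math.cos(2.0 * math.pi * j / N_BINS))
           for j in range(N_BINS)]


def simulate_light_curve(initial):
    """Rotate the ring one bin per step and sum the weighted emission."""
    return [sum(initial[i] * WEIGHTS[(i + t) % N_BINS] for i in range(N_BINS))
            for t in range(N_BINS)]


def fit_initial_structure(observed, n_iters=5000):
    """Least-squares fit of the initial emission by gradient descent."""
    estimate = [0.0] * N_BINS
    step = 1.0 / sum(WEIGHTS) ** 2  # small enough to be stable for this toy
    for _ in range(n_iters):
        model = simulate_light_curve(estimate)
        residual = [m - o for m, o in zip(model, observed)]
        for i in range(N_BINS):
            grad_i = sum(residual[t] * WEIGHTS[(i + t) % N_BINS]
                         for t in range(N_BINS))
            estimate[i] -= step * grad_i
    return estimate


# Hypothetical "true" structure: two compact bright bins on the ring.
true_structure = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.6, 0.0]
observed_curve = simulate_light_curve(true_structure)
recovered = fit_initial_structure(observed_curve)
print([round(value, 3) for value in recovered])
```

The fit only ever sees one number per time step, yet because the rotation and the viewing weight are known, it can work back to where the emission started. The real problem is far harder, which is why the reconstruction remains dependent on the accuracy of the physical models.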

The result is a video showing the clockwise movement of two compact bright regions that trace a path around the black hole. “This is very exciting,” says Bouman. “It didn’t have to come out this way. There could have been arbitrary brightness scattered throughout the volume. The fact that this looks a lot like the flares that computer simulations of black holes predict is very exciting.”

Levis says that the work was uniquely interdisciplinary: “You have a partnership between computer scientists and astrophysicists, which is uniquely synergetic. Together, we developed something that is cutting edge in both fields—both the development of numerical codes that model how light propagates around black holes and the computational imaging work that we did.”

The scientists note that this is just the beginning for this exciting technology. “This is a really interesting application of how AI and physics can come together to reveal something that is otherwise unseen,” says Levis. “We hope that astronomers could use it on other rich time-series data to shed light on complex dynamics of other such events and to draw new conclusions.”

https://www.caltech.edu/about/news/ai-and-physics-combine-to-reveal-the-3d-structure-of-a-flare-erupting-around-a-black-hole
