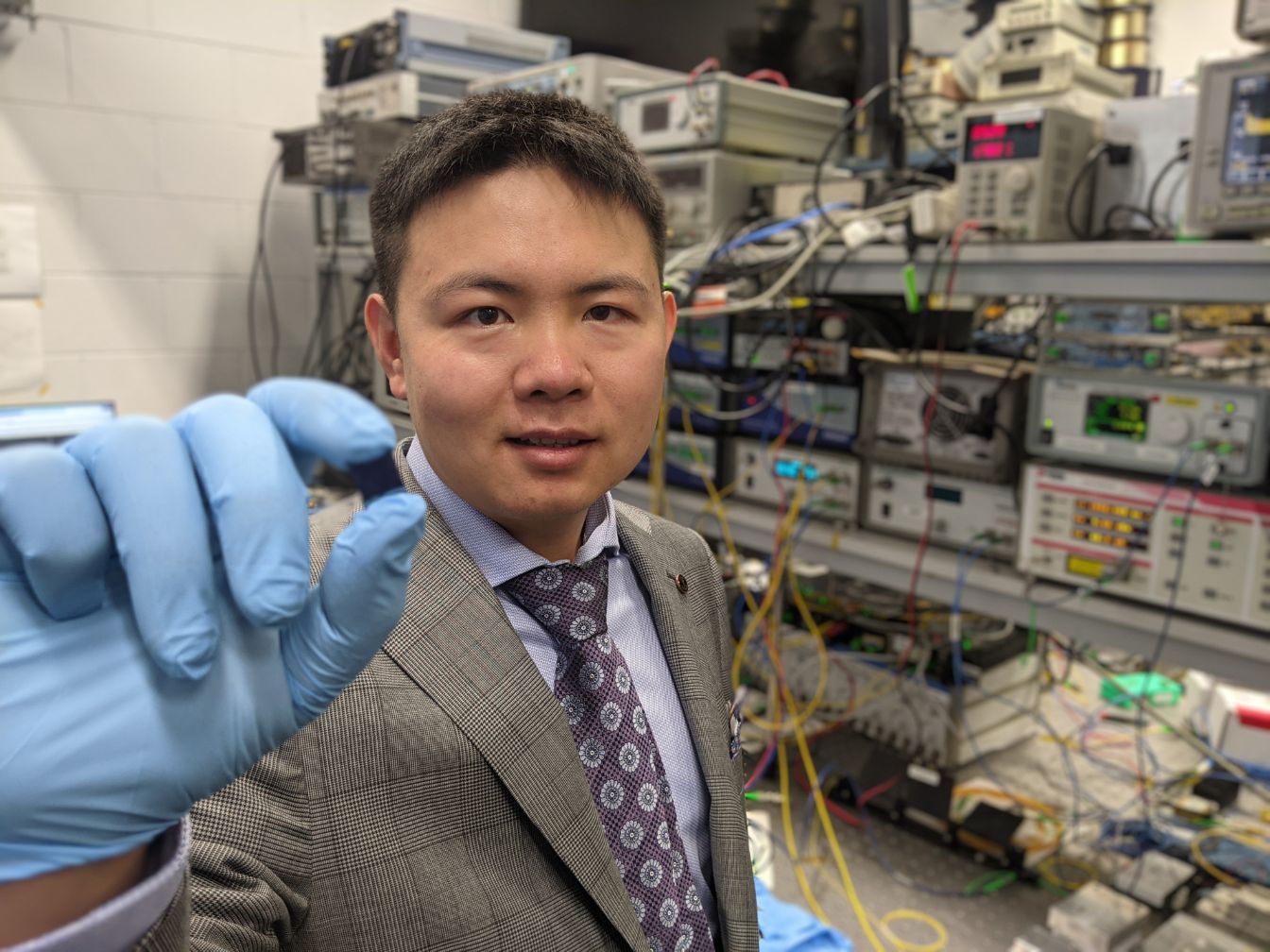

IMAGE: JENNIFER MCCANN/PENN STATE

As more private data is stored and shared digitally, researchers are exploring new ways to protect data against attacks from bad actors. Current silicon technology exploits microscopic differences between computing components to create secure keys, but artificial intelligence (AI) techniques can be used to predict these keys and gain access to data. Now, Penn State researchers have designed a way to make these keys harder to crack.

Led by Saptarshi Das, assistant professor of engineering science and mechanics, the researchers used graphene — a layer of carbon one atom thick — to develop a no...
