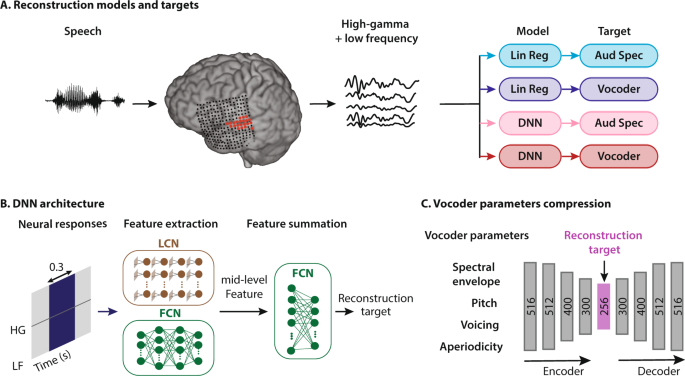

Researchers at the USC Viterbi School of Engineering are using generative adversarial networks (GANs) — technology best known for creating deepfake videos and photorealistic human faces — to improve brain-computer interfaces for people with disabilities.

In a paper published in Nature Biomedical Engineering, the team successfully taught an AI to generate synthetic brain activity data. The data, specifically neural signals called spike trains, can be fed into machine-learning algorithms to improve the usability of brain-computer interfaces (BCIs).

BCI systems work by analyzing a person’s brain signals and translating that neur...
