Large language models (LLMs), such as OpenAI’s renowned conversational platform ChatGPT, have become increasingly widespread, with many internet users relying on them to find information quickly and produce texts for various purposes. Yet most of these models perform significantly better on computers than on smaller devices, due to the high computational demands associated with their size and data processing capabilities.

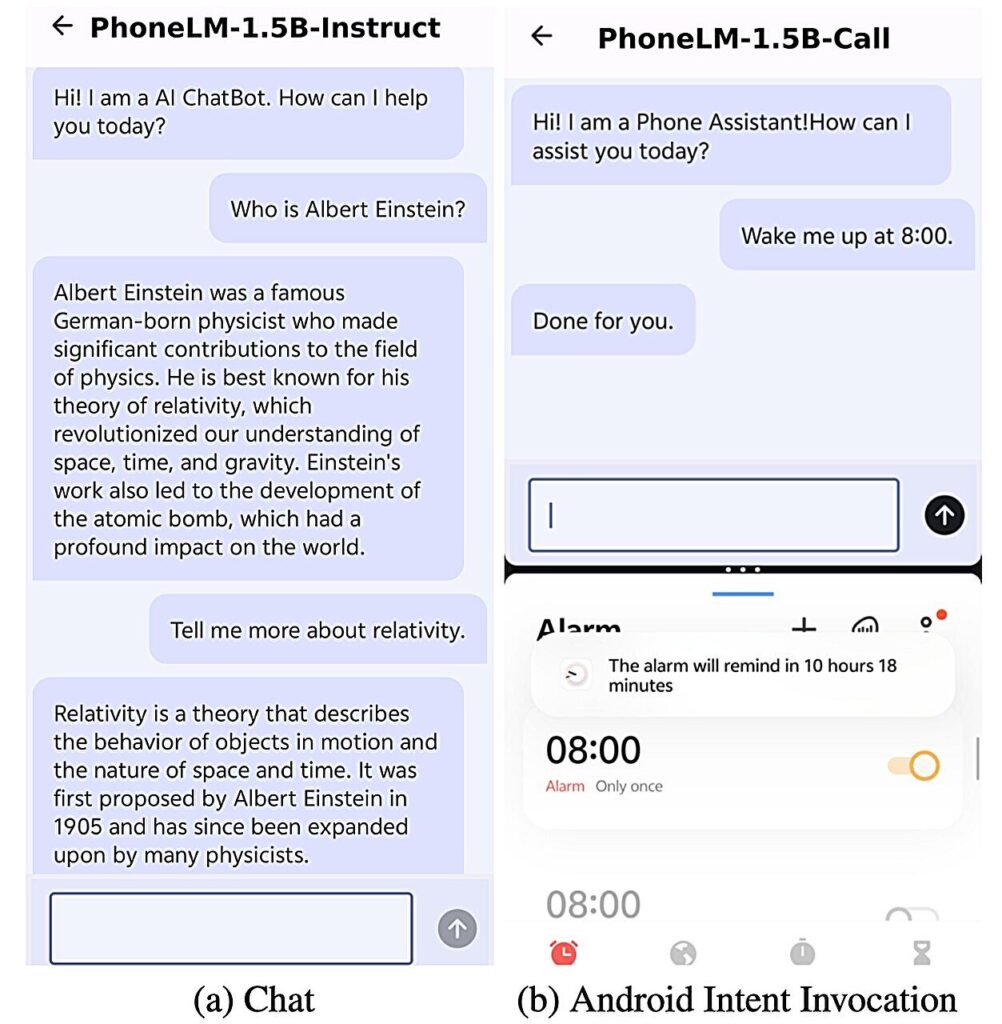

To tackle this challenge, computer scientists have also been developing small language models (SLMs), which have a similar architecture but are smaller...
