You’re running late at the airport and urgently need to access your account, only to be greeted by one of those frustrating tests: “Select all images with traffic lights” or “Type the letters you see in this box.” You squint, you guess, but somehow you’re wrong. You complete another test, but the site still isn’t satisfied.

“Your flight is boarding now,” the tannoy announces as the website gives you yet another puzzle. You swear at the screen, close your laptop and rush towards the gate.

Now, here’s a thought to cheer you up: Bots are now solving these puzzles in milliseconds using artificial intelligence (AI). How ironic. The tools designed to prove we’re human are now obstructing us more than the machines they’re supposed to be keeping at bay.

Welcome to the strange battle between bot detection and AI, which is set to get even more complicated in the coming years as technology continues to improve. So what does the future look like?

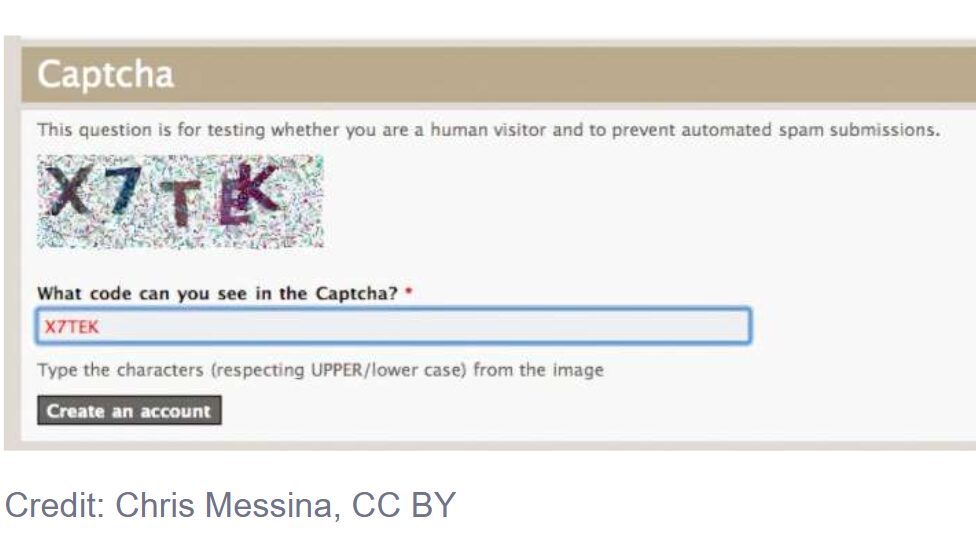

Captcha, which stands for Completely Automated Public Turing test to tell Computers and Humans Apart, was invented in the early 2000s by a team of computer scientists at Carnegie Mellon University in Pittsburgh. It was a simple idea: Get internet users to prove their humanity via tasks they can easily complete, but which machines find difficult.

Machines were already causing havoc online. Websites were flooded with bots doing things like setting up fake accounts to buy up concert tickets, or posting automated comments to market fake Viagra or to entice users to take part in scams. Companies needed a way to stop this pernicious activity without losing legitimate users.

The early versions of Captcha were basic but effective. You’d see wavy, distorted letters and type them into a box. Bots couldn’t “read” the text the way humans could, so websites stayed protected.

Captcha went through several iterations in the years that followed. ReCaptcha, created in 2007, added a second element: alongside the usual test word, you also had to key in a distorted word scanned from an old book.

Then in 2014 came reCaptcha v2, by which time Google owned the service (it bought reCaptcha in 2009). This is the one that asks users to tick the “I am not a robot” box and often choose from a selection of pictures containing cats or bicycle parts, or whatever. It is still the most popular version today, and Google gets paid by companies that use the service on their websites.

How AI has outgrown the system

Today’s AI systems can solve the challenges these Captchas rely on. They can “read” distorted text, so the wavy or squished letters of the original Captcha tests pose them little difficulty. Thanks to advances in machine learning, particularly computer vision, AI can decode even the messiest of words.

Similarly, AI tools such as Google Cloud Vision and OpenAI’s CLIP can recognize hundreds of objects faster and more accurately than most humans. If a Captcha asks an AI to click all the buses in a selection of pictures, it can solve the puzzle in a fraction of a second, whereas a human might take 10 to 15 seconds.

This isn’t just a theoretical problem. Consider driving tests: waiting lists for tests in England are many months long, though you can get a much faster test by paying a higher fee to a black-market tout. The Guardian reported in July that touts commonly used automated software to book up all the test slots, while swapping candidates in and out to fit their ever-changing schedules.

In an echo of the situation 20 years ago, there are similar issues with tickets for things such as football matches. The moment tickets become available, bots overwhelm the system—bypassing Captchas, purchasing tickets in bulk and reselling them at inflated prices. Genuine users often miss out because they can’t operate as quickly.

Bots also attack social media platforms, e-commerce websites and online forums. Fake accounts spread misinformation, post spam or grab limited items during sales. In many cases, Captcha is no longer able to stop these abuses.

What’s happening now?

Developers are continually coming up with new ways to verify humans. Some systems, like Google’s reCaptcha v3 (introduced in 2018), no longer ask you to solve puzzles at all. Instead, they watch how you interact with a website. Do you move your cursor naturally? Do you type like a person? Humans have subtle, imperfect behaviors that bots still struggle to mimic.
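To make the idea concrete, here is a toy sketch of one behavioral signal a system of this kind could look at. It is not how reCaptcha v3 works (Google does not publish its model). It assumes the page has recorded the cursor as a list of (x, y) points with millisecond timestamps, and it flags a path as scripted when the path is almost perfectly straight and sampled at almost perfectly even intervals. The function names and both cutoffs are hypothetical.

```python
import math
from statistics import pstdev


def path_straightness(points):
    """Direct distance divided by distance travelled (1.0 = perfectly straight)."""
    if len(points) < 2:
        return 1.0
    travelled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if travelled == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / travelled


def looks_scripted(points, timestamps_ms, straight_cutoff=0.99, jitter_cutoff_ms=1.0):
    """Hypothetical heuristic: a near-straight path with near-constant sample
    spacing looks machine-generated; human movement curves and stutters."""
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    jitter = pstdev(intervals) if len(intervals) > 1 else 0.0
    return path_straightness(points) >= straight_cutoff and jitter < jitter_cutoff_ms


# Illustrative data: a ruler-straight, evenly timed path vs. a wobbly one.
bot_path, bot_times = [(0, 0), (10, 10), (20, 20), (30, 30)], [0, 16, 32, 48]
human_path, human_times = [(0, 0), (8, 13), (19, 18), (30, 30)], [0, 21, 35, 58]

print(looks_scripted(bot_path, bot_times))      # True
print(looks_scripted(human_path, human_times))  # False
```

A real system would combine many signals like this one, because a bot that adds random curves and timing jitter would pass this particular check on its own.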

ReCaptcha v3 has its critics. It raises privacy concerns, it leaves each website to interpret the risk score it returns and decide who counts as a bot, and bots can beat the system anyway. There are alternatives that use similar logic, such as “slider” puzzles that ask users to drag a jigsaw piece into place, but these too can be overcome.
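On the website’s side, assessing a reCaptcha v3 score means turning a number into a decision. After the site sends the user’s token to Google’s siteverify endpoint, the JSON reply includes a `success` flag, a `score` between 0.0 (very likely a bot) and 1.0 (very likely a human) and the `action` name the page reported. The sketch below assumes that reply has already been fetched and parsed into a dict; the `decide` function, the `"login"` action name, the 0.3 and 0.5 cutoffs and the allow/challenge/block policy are illustrative assumptions, not Google’s rules.

```python
def decide(verify_reply: dict, expected_action: str = "login",
           block_below: float = 0.3, allow_from: float = 0.5) -> str:
    """Map a parsed reCaptcha v3 siteverify reply to a site policy.
    Cutoffs and the middle 'challenge' band are hypothetical choices."""
    if not verify_reply.get("success"):
        return "block"  # e.g. the token was invalid, expired or reused
    if verify_reply.get("action") != expected_action:
        return "block"  # token was issued for a different page action
    score = float(verify_reply.get("score", 0.0))
    if score >= allow_from:
        return "allow"
    if score < block_below:
        return "block"
    return "challenge"  # grey zone: e.g. fall back to a visible puzzle


reply = {"success": True, "score": 0.4, "action": "login"}
print(decide(reply))  # challenge
```

Where to put the cutoffs is exactly the judgment call the site has to make: set them too high and genuine users get extra puzzles, set them too low and more bots get through.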


Some websites are now turning to biometrics to verify humans, such as fingerprint scans, voice recognition or facial recognition. Biometrics are harder for bots to fake, but they come with their own problems: privacy concerns, expensive technology and limited access for some users, for example people who can’t afford a suitable smartphone or who can’t speak because of a disability.

The imminent arrival of AI agents will add another layer of complexity. We will increasingly want bots to visit sites and do things on our behalf, so web companies will need to start distinguishing between “good” bots and “bad” bots. This area still needs much more consideration, but digital authentication certificates have been proposed as one possible solution.

In sum, Captcha is no longer the simple, reliable tool it once was. AI has forced us to rethink how we verify people online, and it’s only going to get more challenging as these systems get smarter. Whatever becomes the next technological standard, it’s going to have to be easy to use for humans, but one step ahead of the bad actors.

So the next time you find yourself clicking on blurry traffic lights and getting infuriated, remember you’re part of a bigger fight. The future of proving humanity is still being written, and the bots won’t be giving up any time soon.

https://techxplore.com/news/2024-12-human-bot-longer-ai-agents.html
